Hello everyone,

In this post, I will tell you how I wrote a Python module + CLI script to fetch proxies from free proxy listing sites.

I am a part-time freelancer and I get tons of projects related to data scraping such as scraping Instagram posts, scraping Udemy course details, scraping leads from LinkedIn, etc.

Now obviously, fetching content from such sites in bulk, within a short time and from the same IP address, will get that IP address blocked.

So, to get content from such sites without getting blocked, I use several techniques, such as:

- Using Proxies

- Using a random User-Agent with each request

- Adding a sleep interval between requests

- Setting headers such as referer, authority, etc. in the request

In this post, I will just talk about proxies.

I use free or paid proxies depending on the client’s requirements.

Paid proxies can simply be stored in a list, array, or file and used from within the script.

But using free proxies takes more work:

First, I need to find free proxy listing sites and write a script to scrape proxies from them.

Then, I need a function that checks whether each proxy works, and a list or array that holds only the working proxies.

Repeating these steps for every new script or project is tedious.

So, a few days ago, I got the idea to write a Python module for this purpose that can also be used as a command-line utility to fetch free proxies.

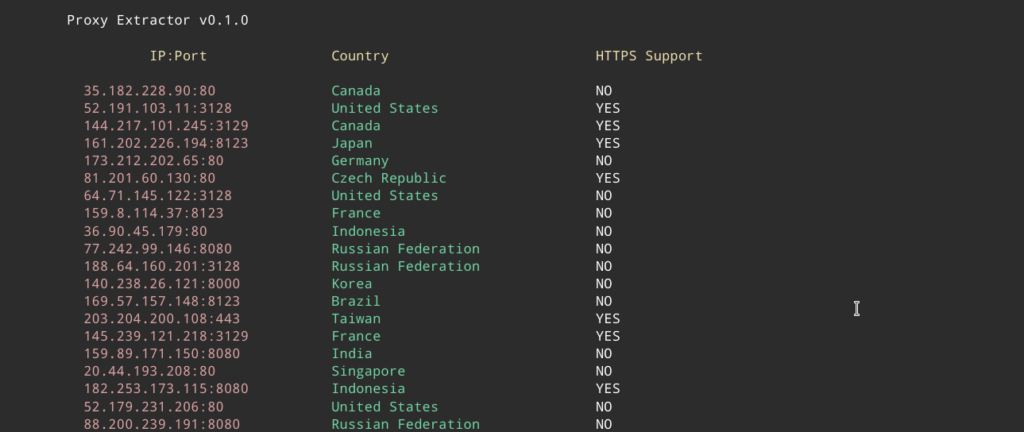

Proxy Extractor

Now, let’s dive into the code and see how I wrote the module + CLI utility:

1. proxy_extractor/__init__.py

I created this file so that Python treats this directory as a package.

2. proxy_extractor/useragents.py

I created this file to store the list of User-Agents so that with each request a random user-agent can be used.

I wrote a function, rand_user_agent, to pick a random user-agent from that list using the random module.
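A function like rand_user_agent can be very small. Here is a minimal sketch; the USER_AGENTS list name and the strings in it are placeholders I made up for illustration, not the module's actual list.

```python
import random

# Placeholder values for illustration only; a real list would be much longer.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
]


def rand_user_agent():
    """Return one User-Agent string picked at random from the list."""
    return random.choice(USER_AGENTS)


# Typical use: build the headers for a single request.
headers = {"User-Agent": rand_user_agent()}
```

Calling rand_user_agent() once per request means consecutive requests are likely to carry different User-Agent values.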

3. proxy_extractor/requester.py

This file contains a function, make_request, that sends an asynchronous request to the URL passed to it.

To make the asynchronous request, I used the aiohttp module.

I also used the rand_user_agent function from useragents.py to pick a random user-agent and send it with the request made by make_request.

4. proxy_extractor/scraper.py

This file contains a function, _extract_proxies_free_proxy_list_net, that parses the HTML content fetched from free-proxy-list with the help of the bs4 module.
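To give a rough idea of what such a parsing step involves, here is a sketch. The module itself uses bs4; to keep this example free of third-party dependencies, I swapped in the standard library's html.parser instead. The parse_proxy_table name, the sample HTML, and its four-column layout are all assumptions for illustration and do not reflect the real site's markup.

```python
from html.parser import HTMLParser

# Made-up sample table; the real page has its own columns and markup.
SAMPLE_HTML = """
<table>
  <tr><th>IP</th><th>Port</th><th>Country</th><th>Https</th></tr>
  <tr><td>203.0.113.10</td><td>8080</td><td>India</td><td>yes</td></tr>
  <tr><td>198.51.100.7</td><td>3128</td><td>Germany</td><td>no</td></tr>
</table>
"""


class _TableRows(HTMLParser):
    """Collect the text of every <td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None       # cells of the row being read, or None
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._in_cell = True
            self._row.append("")

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False
        elif tag == "tr":
            self._in_cell = False
            if self._row:      # header rows use <th>, so they stay empty
                self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._in_cell:
            self._row[-1] += data.strip()


def parse_proxy_table(html, https_only=False):
    """Turn the table rows into dicts, optionally keeping HTTPS proxies only."""
    parser = _TableRows()
    parser.feed(html)
    proxies = []
    for cells in parser.rows:
        if len(cells) < 4:
            continue           # skip rows that do not match the assumed layout
        ip, port, country, https = cells[:4]
        supports_https = https.lower() == "yes"
        if https_only and not supports_https:
            continue
        proxies.append(
            {"ip": ip, "port": port, "country": country, "https": supports_https}
        )
    return proxies


proxies = parse_proxy_table(SAMPLE_HTML)
```

With bs4, the row and cell walking would be replaced by calls such as find_all, but the shape of the result stays the same: one record per proxy.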

5. proxy_extractor/checker.py

This file contains an async function, proxy_check, that checks whether the passed proxy is working or not. It uses the aiohttp module and a random user-agent from useragents.py, with a timeout limit (default: 50 seconds).

**You can check out this post to understand the basics of async, await, and the asyncio module.

6. proxy_extractor/extractor.py

This file contains a helper class and several functions, most of them coroutines (async functions):

AsyncIter class(items) –

This class takes a list as input and wraps it in an async iterable that can be used in async for loops.
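A class like this can be written in a few lines. The sketch below is one common way to do it, by making __aiter__ an async generator; I am not claiming it matches the module's code line for line, and the collect helper and the sample addresses are only there for the demonstration.

```python
import asyncio


class AsyncIter:
    """Wrap a regular list so it can be consumed with `async for`."""

    def __init__(self, items):
        self.items = items

    async def __aiter__(self):
        # Defining __aiter__ as an async generator makes each instance
        # usable directly in an `async for` loop.
        for item in self.items:
            yield item


async def collect(proxy_list):
    """Hypothetical helper: read every element back through `async for`."""
    seen = []
    async for proxy in AsyncIter(proxy_list):
        seen.append(proxy)
    return seen


result = asyncio.run(collect(["203.0.113.10:8080", "198.51.100.7:3128"]))
```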

extract_proxies(https, proxy_count) –

This async function fetches the HTML content from free-proxy-list using the make_request function from requester.py. That HTML is then parsed by the _extract_proxies_free_proxy_list_net function from scraper.py, which returns a list of proxies with their IP address, port, country, HTTPS support, etc.

get_extracted_proxies(proxy_list) –

This function takes an async list and yields each element.

proxy_remove(proxy, proxy_count, timeout) –

This function checks whether the passed proxy is working and, if it is, adds it to the working_proxy_list list.

create_jobs(proxy_list, https, proxy_count, timeout) –

This function adds each proxy element yielded from the get_extracted_proxies function to a tasks list.

extract_proxy(https=False, proxy_count=100, timeout=50) –

This function first extracts the proxies using the extract_proxies function and then creates a new event loop with asyncio's new_event_loop function.

*An event loop of asyncio runs asynchronous tasks and callbacks.

Then, I used asyncio's set_event_loop function to set the newly created loop as the current loop.

Then, the extracted proxy list is passed to the AsyncIter class to get an async list of proxies. After that, I used the loop's run_until_complete method to run the create_jobs function until it completes.

Finally, it returns the list of working proxies.
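Here is a simplified sketch of how these pieces can fit together. To keep it runnable without network access, I made a few assumptions: fake_proxy_check is a stand-in for the real proxy_check (it just pretends that proxies on port 8080 work), the candidate list is passed in directly instead of being scraped, and the working list is passed around as an argument, so the signatures differ from the ones listed above.

```python
import asyncio


class AsyncIter:
    """Wrap a regular list so it can be consumed with `async for`."""

    def __init__(self, items):
        self.items = items

    async def __aiter__(self):
        for item in self.items:
            yield item


async def fake_proxy_check(proxy, timeout):
    """Stand-in for the real network check (assumption for this sketch)."""
    await asyncio.sleep(0)  # yield control, as a real request would
    return proxy.endswith(":8080")


async def proxy_remove(proxy, working, proxy_count, timeout):
    """Keep the proxy only if it passes the check and the quota is not full."""
    if await fake_proxy_check(proxy, timeout) and len(working) < proxy_count:
        working.append(proxy)


async def create_jobs(proxy_list, working, proxy_count, timeout):
    """Schedule one check per proxy and wait for all of them to finish."""
    tasks = []
    async for proxy in proxy_list:
        tasks.append(
            asyncio.ensure_future(proxy_remove(proxy, working, proxy_count, timeout))
        )
    await asyncio.gather(*tasks)


def extract_proxy(candidates, proxy_count=100, timeout=50):
    """Run all the checks on a fresh event loop and return the working proxies."""
    working = []
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    try:
        loop.run_until_complete(
            create_jobs(AsyncIter(candidates), working, proxy_count, timeout)
        )
    finally:
        loop.close()
        asyncio.set_event_loop(None)
    return working


working = extract_proxy(
    ["203.0.113.10:8080", "198.51.100.7:3128", "192.0.2.44:8080"]
)
```

Because every check is scheduled as its own task before any of them is awaited, slow proxies do not hold up the fast ones; the total time is roughly that of the slowest check rather than the sum of all of them.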

7. color.py

I used this file to set the color code values based on the user's operating system.
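One possible way to do this is sketched below. It is only an assumption about the approach: the get_colors name, the choice of colors, and the decision to turn colors off on Windows are mine for illustration, and the actual file may handle it differently.

```python
import platform


def get_colors(system=None):
    """Return ANSI color codes, or empty strings where they may not render."""
    system = system or platform.system()
    if system == "Windows":
        # Assumption: older Windows consoles do not interpret ANSI escape
        # codes by default, so fall back to plain, uncolored text.
        return {"green": "", "red": "", "reset": ""}
    return {"green": "\033[92m", "red": "\033[91m", "reset": "\033[0m"}


colors = get_colors()
message = f"{colors['green']}working proxy found{colors['reset']}"
```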

8. cli.py

With this file, the Python module can be used as a command-line utility. I used the argparse module to parse the command-line arguments. It calls the extract_proxy function from proxy_extractor/extractor.py.
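A command-line wrapper of this kind could look roughly like the sketch below. The program name and the --https, --count, and --timeout flags are hypothetical, chosen to mirror the parameters of extract_proxy(https=False, proxy_count=100, timeout=50); check the project's usage instructions for the real options.

```python
import argparse


def build_parser():
    """Build a parser whose options mirror extract_proxy's parameters."""
    parser = argparse.ArgumentParser(
        prog="proxy-extractor",  # hypothetical program name
        description="Fetch working proxies from free proxy listing sites.",
    )
    parser.add_argument(
        "--https", action="store_true", help="only keep proxies that support HTTPS"
    )
    parser.add_argument(
        "--count", type=int, default=100, help="maximum number of proxies to return"
    )
    parser.add_argument(
        "--timeout", type=int, default=50, help="seconds to wait for each proxy check"
    )
    return parser


# Parse an example command line instead of sys.argv so the sketch is repeatable.
args = build_parser().parse_args(["--https", "--count", "10"])

# A real cli.py would now pass these values on, for example:
# extract_proxy(https=args.https, proxy_count=args.count, timeout=args.timeout)
```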

Those were the code files used to build this Python module + CLI utility. You can check it out here and can also read about its installation and usage.

Feel free to contribute to this project.

I hope you got some insights into my module.

Got a question or suggestion? Comment below.

Thank you so much for reading this post.
